Introduction

I recently used the APEX 22.2 Meta Tags feature to add Open Graph tags to my APEX Developer Blogs website. Open Graph meta tags are the tags that allow you to create fancy Tweets like the one below:

For more on APEX Meta Tags, check out this post from Plamen Mushkov.

My problem was that Twitter, LinkedIn, and other platforms did not display the title and card when I posted the URL of a page containing Meta Tags.

The culprit turned out to be robots.txt. At this point, I should say that the APEX Developer Blogs website is hosted on the Oracle OCI APEX Service (ATP Lite). I am also using a Vanity URL, which is another important factor.

Read this post from Timo Herwixt to find out how to add a vanity URL to your OCI APEX Service or ATP APEX apps.

What is robots.txt

robots.txt is a text file webmasters use to communicate with web crawlers and other web robots, indicating which parts of a website should not be processed or scanned. This file is placed in the website's root directory and is publicly accessible to provide instructions based on the Robots Exclusion Standard. Here is a link to a Google guide to robots.txt.

You might wonder what robots.txt has to do with Meta Tags on social media sites. Twitter, for example, checks your robots.txt before scanning your URLs. Here is an excerpt from their Cards Getting Started Guide.

The Issue

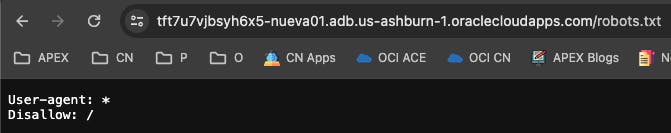

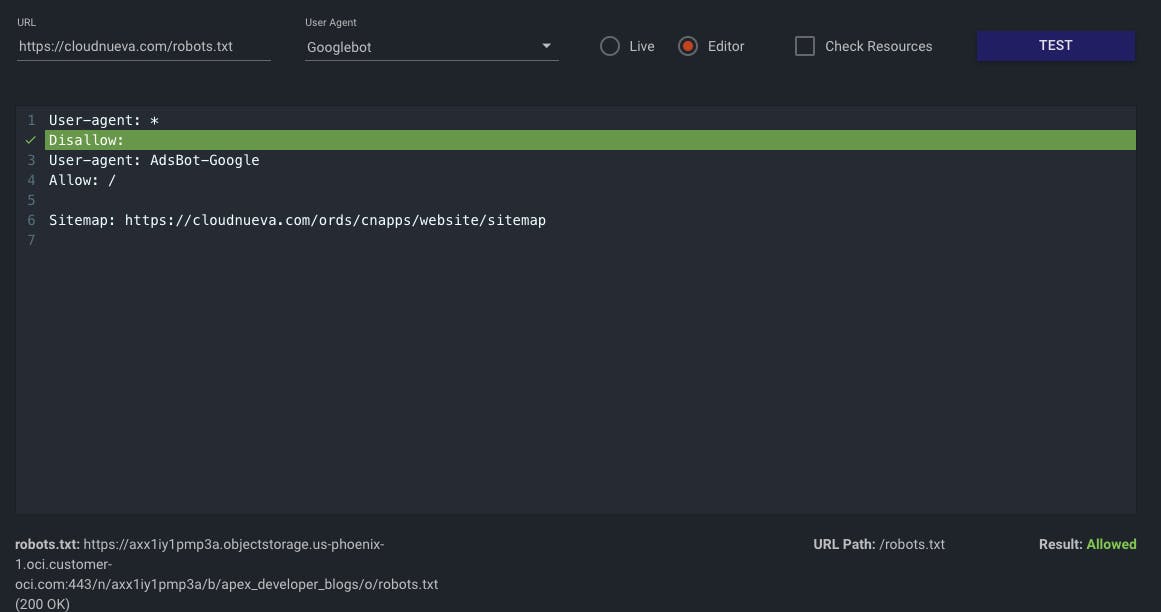

The issue is caused by the default robots.txt served by Oracle OCI, which looks like the one below. It tells every crawler (User-agent: *) that it may not crawl any path on the site (Disallow: /).

```text
User-agent: *
Disallow: /
```
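One way to see why crawlers give up is to feed the default file to Python's standard-library `urllib.robotparser`, which implements the Robots Exclusion rules. This is an illustrative sketch only: `can_crawl` is a hypothetical helper, the page URL is a placeholder, and `Twitterbot`, `LinkedInBot`, and `facebookexternalhit` are the user-agent tokens those platforms' link-preview crawlers announce. Each platform runs its own parser, so treat this as an approximation of their behaviour.

```python
from urllib.robotparser import RobotFileParser

# The default robots.txt served by the OCI APEX Service.
DEFAULT_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the file as "read"
    return parser.can_fetch(user_agent, url)


# Placeholder page URL, for illustration only.
page = "https://yourdomain.com/ords/r/myapp/home"

for agent in ("Twitterbot", "LinkedInBot", "facebookexternalhit"):
    print(agent, can_crawl(DEFAULT_ROBOTS_TXT, agent, page))
```

With `Disallow: /` under `User-agent: *`, every check prints `False`, which lines up with the missing cards.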

Even without a Vanity URL, if you have an Always Free ATP instance, you can see the default robots.txt by appending /robots.txt to the URL generated for your instance.

The second part of the issue is that, because the OCI APEX Service is fully managed, you cannot access the web server to change the robots.txt file.

The Solution

Vlad's Solution

I found the solution in the comments of this idea from the APEX Ideas App. The bullet points below are a direct copy and paste from Vlad Uvarov's response to the idea. Vlad is from the APEX Development team.

So, the possible solutions (some of which were already mentioned in this Idea):

1. A web server on Compute for the static website, including /robots.txt, or the customer-managed ORDS with its own docroot, fronted by the vanity URL LBaaS. This would be an overkill for just the robots.txt requirement, but customers may also be interested in other benefits these options bring. No extra cost (can use Always Free resources).

2. An ORDS RESTful module that prints out the desired static or dynamic robots.txt directives, and an LBaaS Rule Set with the 302 URL redirect rule for the /robots.txt path. Crawlers usually follow these 3xx redirects (see here). The same RESTful module can dynamically generate the Sitemap based on APEX dictionary views. No extra cost.

3. A static robots.txt in a public Object Storage bucket, and an LBaaS Rule Set with the 302 URL redirect. Object Storage comes with a large number of free requests, but even if exceeded, the cost would typically be under $1 per month.

4. A Web Application Firewall (WAF) rule for Request Access Control, which is applied to the /robots.txt path and performs the HTTP Response Action to return your static robots.txt content as the response body with 200 OK status code. This essentially intercepts and overrides the default /robots.txt. Attach this WAF to your vanity URL LBaaS. No extra cost (but you need to use a Paid account and be within the number of requests limit) and you get all benefits of WAF.

Implementing Bullet 3: Static robots.txt

I will focus on implementing option three, which I saw as the simplest solution. It depends on having the Vanity URL configured and on having access to the OCI Load Balancer on which the Vanity URL is configured.

1 - Create robots.txt

Using any text editor, create a file called robots.txt. Follow this Google Guide to find out more about the specifications.

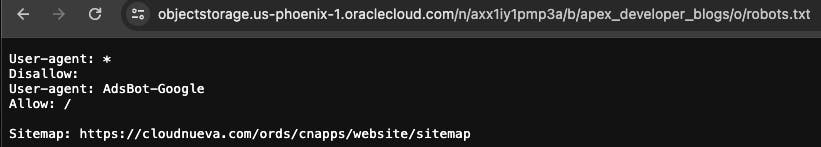

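TL;DR, my robots.txt now looks like this. It allows any crawler to crawl my entire site and points crawlers to my sitemap. AdsBot-Google gets its own group because Google's AdsBot crawlers ignore the wildcard (*) group.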

```text
User-agent: *
Disallow:

User-agent: AdsBot-Google
Allow: /

Sitemap: https://cloudnueva.com/ords/cnapps/website/sitemap
```
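To sanity-check a file like this before uploading it, you can parse it locally. This is a minimal sketch using Python's `urllib.robotparser` (`site_maps()` needs Python 3.8 or later); the page path is a hypothetical placeholder, and real crawlers may interpret edge cases slightly differently.

```python
from urllib.robotparser import RobotFileParser

NEW_ROBOTS_TXT = """\
User-agent: *
Disallow:

User-agent: AdsBot-Google
Allow: /

Sitemap: https://cloudnueva.com/ords/cnapps/website/sitemap
"""

parser = RobotFileParser()
parser.parse(NEW_ROBOTS_TXT.splitlines())

# Hypothetical page on the site, for illustration only.
page = "https://cloudnueva.com/ords/r/myapp/home"

for agent in ("Twitterbot", "LinkedInBot", "AdsBot-Google"):
    # An empty "Disallow:" means "allow everything" for the * group.
    print(agent, parser.can_fetch(agent, page))

# Sitemap lines are collected independently of any User-agent group.
print(parser.site_maps())
```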

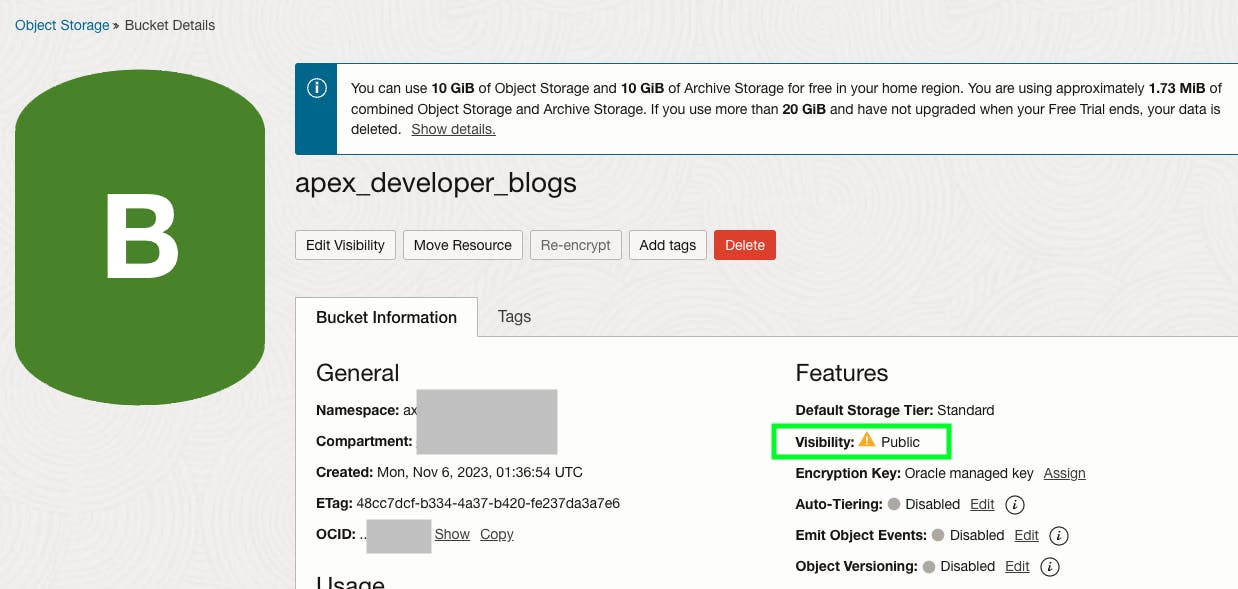

2 - Upload robots.txt to a Public OCI Bucket

Create a Public Bucket in OCI:

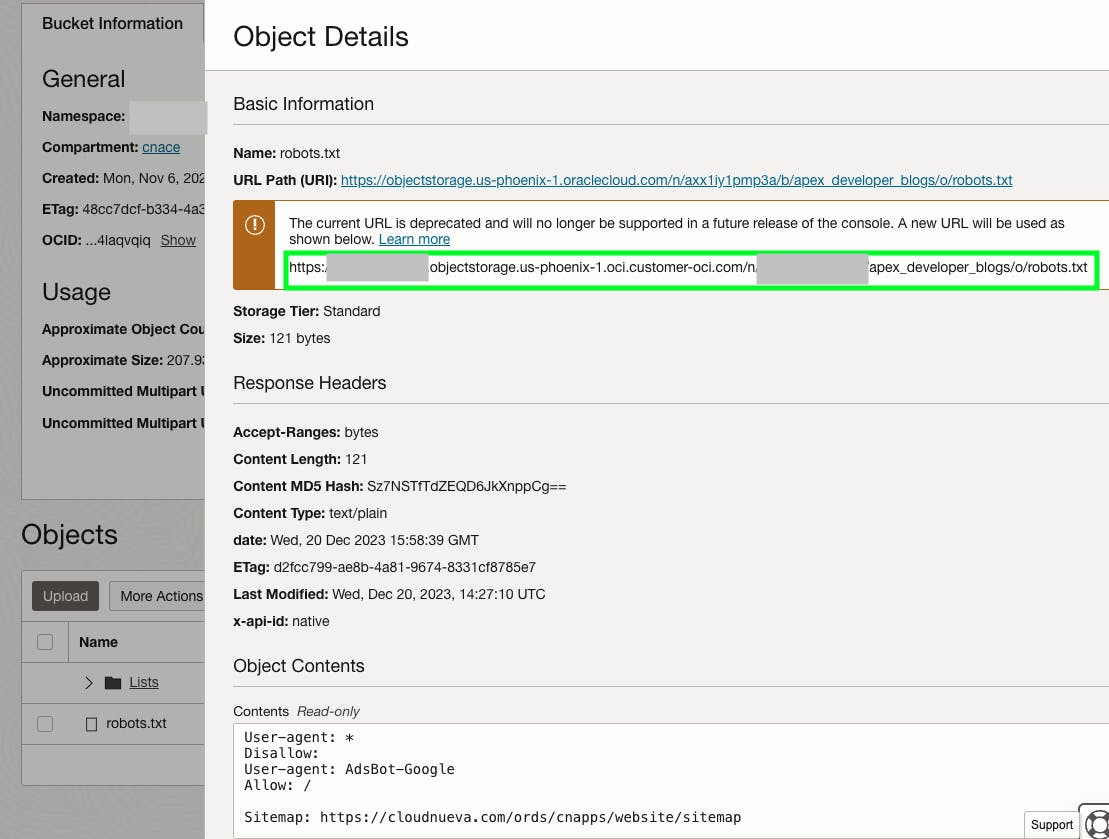

Upload robots.txt to the bucket and make a note of its URL; you will need it for the redirect rule.

3 - Add a Redirect to the Load Balancer

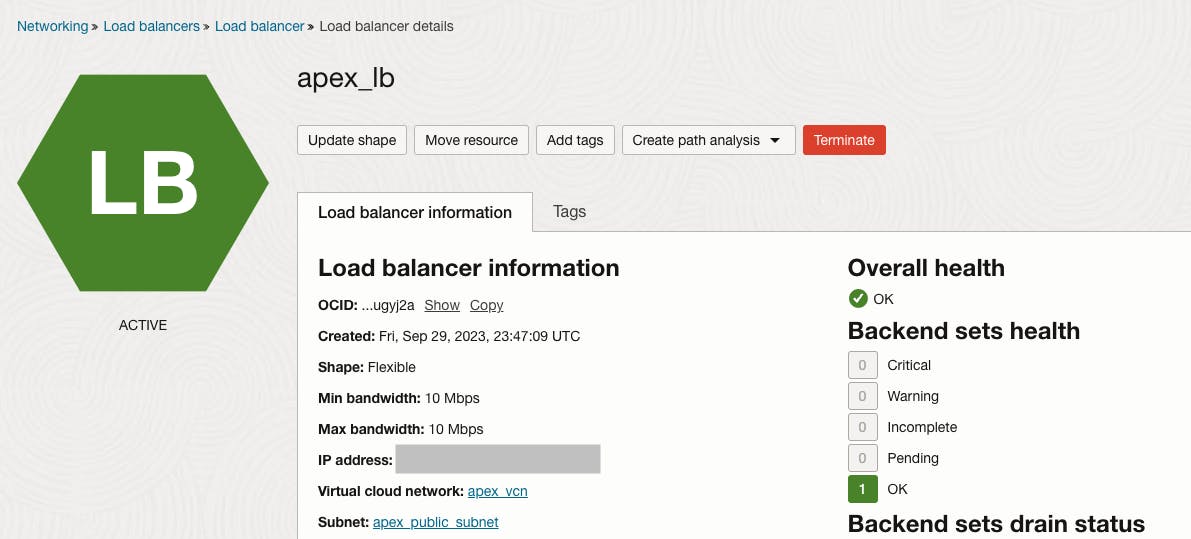

From the OCI console, open the Load Balancer on which your Vanity URL is configured:

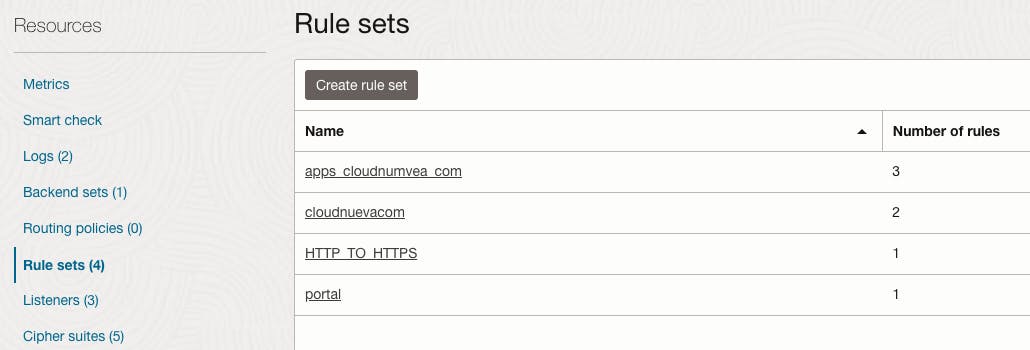

Then click the 'Rule sets' link to view the Rule sets you have configured.

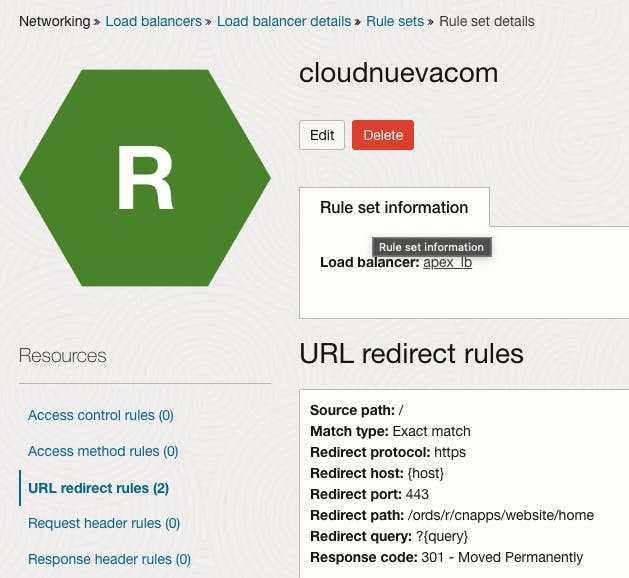

After selecting the appropriate Rule set, click on the 'URL redirect rules' link:

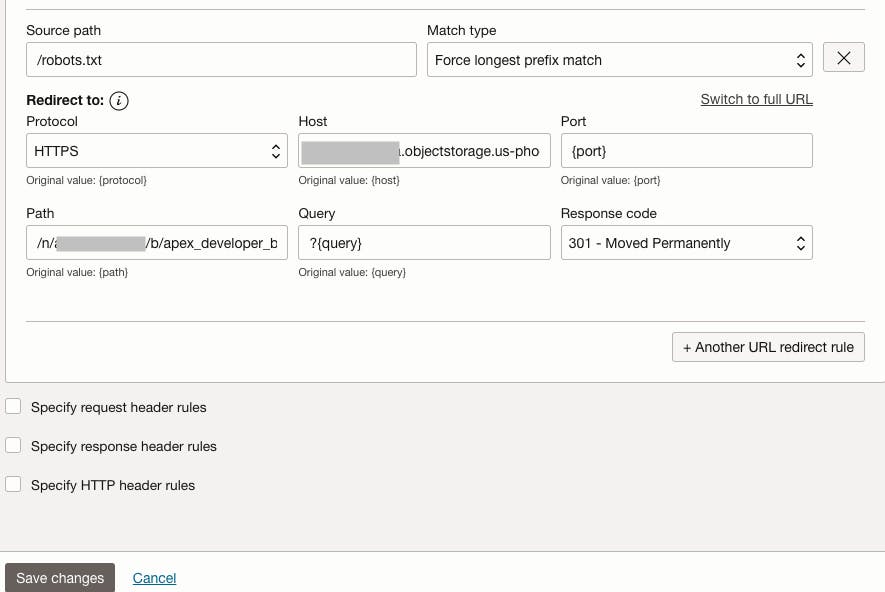

Click Edit and add the following rule:

This rule tells the Load Balancer to redirect requests for yourdomain.com/robots.txt to the static robots.txt file in your OCI bucket.

Note that the 'Hostname' and the 'Path' are broken into two parts in the rule.

Note: If you have subdomains, e.g., apps.cloudnueva.com, you need to set up redirect rules for them as well.
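Because the rule takes the hostname and the path separately, it can help to split the bucket URL first. This sketch assumes the native public Object Storage URL shape (`https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/<bucket>/o/<object>`); the region, namespace, and bucket names are hypothetical, and `split_redirect_target` is an illustrative helper, not an OCI API.

```python
from typing import Tuple
from urllib.parse import urlsplit


def split_redirect_target(object_url: str) -> Tuple[str, str]:
    """Hypothetical helper: split a public object URL into the hostname
    and path that are entered as separate fields in the redirect rule."""
    parts = urlsplit(object_url)
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError(f"Expected an absolute https URL, got {object_url!r}")
    return parts.hostname, parts.path


# Hypothetical region, namespace, and bucket, for illustration only.
url = "https://objectstorage.us-ashburn-1.oraclecloud.com/n/mynamespace/b/public-web/o/robots.txt"
host, path = split_redirect_target(url)
print("Hostname:", host)
print("Path:", path)
```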

4 - Test Your robots.txt

If all is well, you should be able to open https://yourdomain.com/robots.txt and be redirected to your robots.txt file. For example, https://cloudnueva.com/robots.txt.
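A browser silently follows the redirect, so to confirm that the Load Balancer rule itself is answering, you can request /robots.txt without following redirects. This is a minimal sketch using only `http.client`; `check_redirect` and `is_redirect` are hypothetical helper names, and the set of redirect codes is the standard HTTP 3xx redirect list rather than anything OCI-specific.

```python
import http.client
from typing import Optional, Tuple
from urllib.parse import urlsplit


def check_redirect(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str]]:
    """Send a GET for url WITHOUT following redirects.

    Returns the HTTP status code and the Location header (None if absent).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    try:
        target = parts.path or "/"
        if parts.query:
            target += "?" + parts.query
        conn.request("GET", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def is_redirect(status: int) -> bool:
    """True for the standard HTTP redirect status codes."""
    return status in (301, 302, 303, 307, 308)
```

For example, running `check_redirect("https://yourdomain.com/robots.txt")` against your own domain should, if the rule is in place, return a 3xx status and a `Location` header pointing at your bucket URL.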

Other Testing Tools

Here are some other tools that you may find useful.

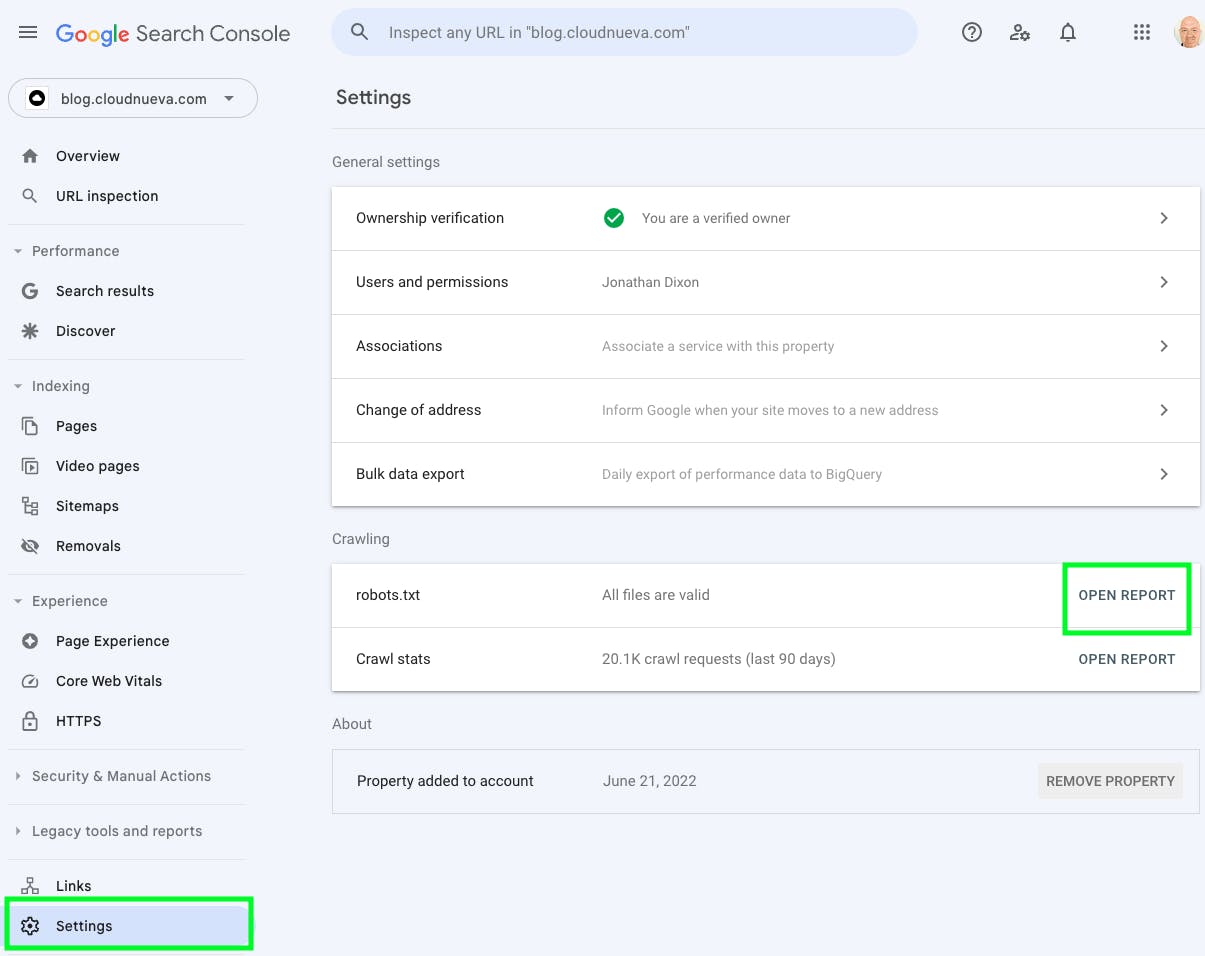

Google Search Console

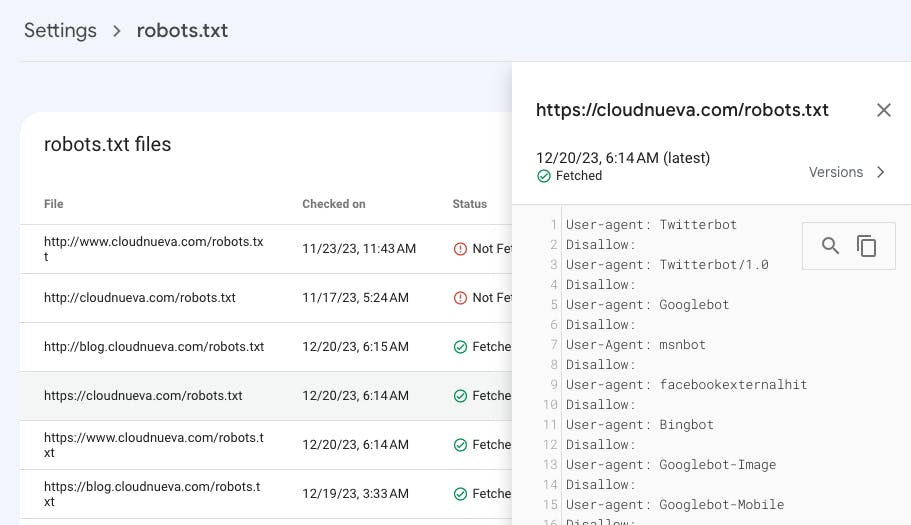

If you use Google Search Console, verify that Google can read your robots.txt and check which version it has fetched. Sign in to Google Search Console, click Settings, then open the robots.txt report.

Click on a specific file to see what Google thinks is in your robots.txt file. You can also submit a request for Google to recheck the robots.txt from here.

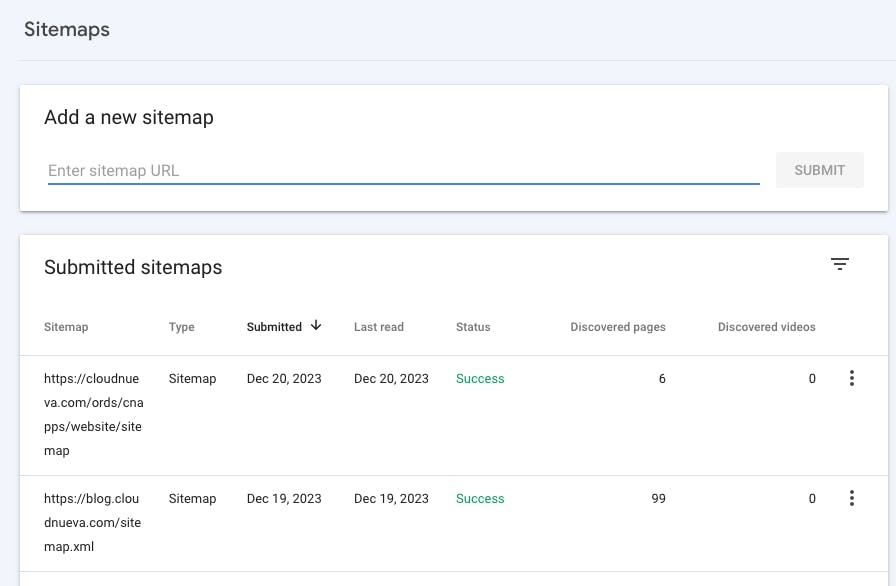

Another side effect of Google not being able to see the correct robots.txt file is that Google also won't read your sitemap. Once you have added your new robots.txt, verify and resubmit your sitemap under the 'Sitemaps' menu option.

Online robots.txt Testers

Various SEO websites have free tools that allow you to check that certain agents can access your robots.txt, e.g., https://technicalseo.com/tools/robots-txt/

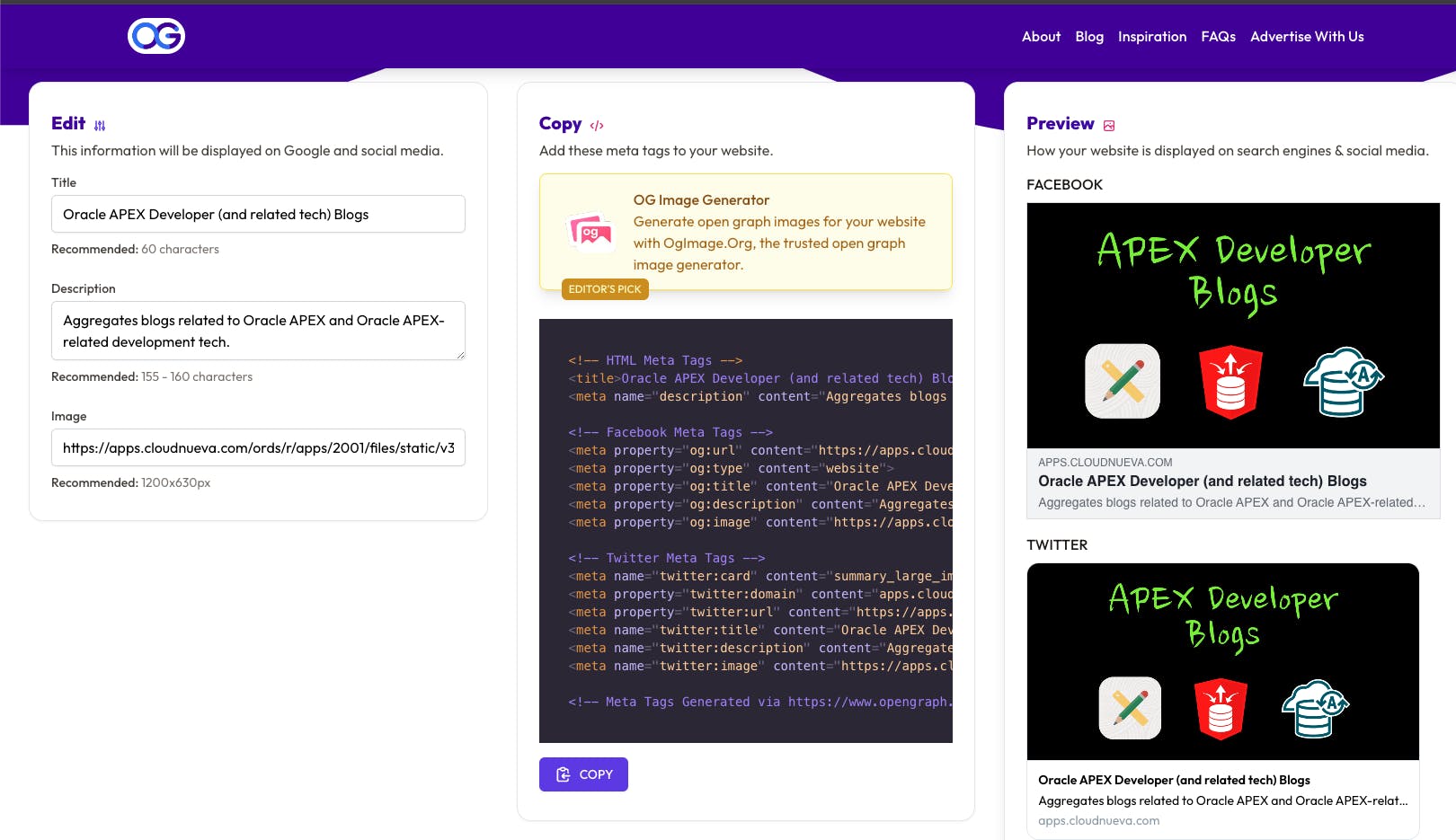

Test Your Social Posts

Finally, back to the point of this post. Now that we have fixed our robots.txt, we should be able to see our fancy Meta Tag-driven cards when we post on Twitter and LinkedIn. Open Graph has a nice little tool that not only tests your Meta Tags but also previews what your posts would look like on various platforms. You can access the tool by following this link.
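If you want to check which tags a page exposes without an external tool, the sketch below pulls the `og:*` and `twitter:*` meta tags out of a page's HTML using Python's standard-library `html.parser`. The class and function names and the sample HTML are hypothetical; in practice you would fetch the page HTML first (for example with `urllib.request.urlopen`). It only reports which tags are present; it does not account for robots.txt, which the platforms check separately.

```python
from html.parser import HTMLParser
from typing import Dict


class SocialMetaParser(HTMLParser):
    """Collect Open Graph (property="og:...") and Twitter (name="twitter:...")
    meta tags; the first occurrence of each key wins."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        key = attr.get("property") or attr.get("name") or ""
        content = attr.get("content")
        if key.startswith(("og:", "twitter:")) and content is not None:
            self.tags.setdefault(key, content)


def extract_social_tags(html: str) -> Dict[str, str]:
    parser = SocialMetaParser()
    parser.feed(html)
    parser.close()
    return parser.tags


# Hypothetical page HTML, for illustration only.
sample = """<html><head>
<meta name="description" content="Not a social tag">
<meta property="og:title" content="APEX Developer Blogs">
<meta property="og:image" content="https://example.com/card.png" />
<meta name="twitter:card" content="summary_large_image">
</head><body></body></html>"""

print(extract_social_tags(sample))
```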

Conclusion

I accept that this post covers a bit of an edge case, but as APEX continues to gain popularity, I fully expect people to start hosting APEX-based websites where SEO is important to them. Given this, we need to make sure APEX sites can match the SEO capabilities of sites built with other content creation platforms.

Ideally, the APEX Development team will take this APEX Ideas App idea seriously and make it easier to deal with robots.txt (and sitemaps, for that matter).