Table of contents

Introduction

Before I start, I should say the APEX_JSON PL/SQL API (available since APEX 5.0) is one of the most useful APIs the APEX development team have provided. I have used APEX_JSON to generate and parse JSON on countless occasions. It served me well through the 11g database years. Since the last of my clients moved off 11g, however, I have managed to wean myself off APEX_JSON (mostly). The main reason being performance. I still think APEX_JSON is easier to understand and is more developer friendly. It's just a lot slower than the native JSON functionality available since 12c.

In this blog post, I'll demonstrate exactly how much faster native database JSON functionality is. Both in generating JSON but more spectacularly in parsing JSON.

Test Environment

All these tests were run on a 21c ATP instance running on the OCI Always Free Tier. Your timings will almost certainly be better in a production environment.

Parsing JSON

Test Data

For this test, I built a JSON document containing 30,000 items (and their attributes). The resulting file is just over 10mb in size. I generated the JSON document using APEX Data Generator (available in APEX 22.1) which I recommend you check out. This is not a massive JSON document, but large enough to be meaningful. To eliminate network from the timings, I loaded the JSON document into a CLOB in a one row database table. Here is an excerpt showing 1 record from the JSON document:

{

"ITEMS": [{

"item_id": "36",

"item_number": "OG8RXZ4N12",

"description": "Winter Gloves (Green) (12 Pack)",

"country_of_mfg": "United Kingdom",

"channel": "ONLINE",

"item_uom": "EA",

"date_introduced": "27-NOV-2021",

"status": "ACTIVE",

"orderable": "Y"

}]

}

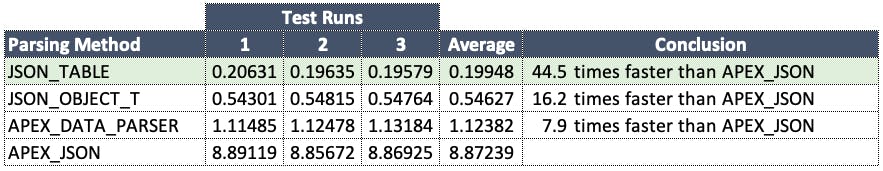

Tests

I compared the performance of APEX_JSON vs two native database JSON parsing APIs, JSON_TABLE and JSON_OBJECT_T. I used the following code blocks to generate the timings. I ran each code block three times and took the average to calculate the percentage improvements.

Update 6/8/2022 - After some discussion on Twitter, I added an additional test using APEX_DATA_PARSER. I used the same JSON stored in a local table but in BLOB not a CLOB column. As confirmed by Carsten, APEX_DATA_PARSER uses JSON_TABLE behind the scenes (when available).

APEX_JSON

DECLARE

l_json_clob CLOB;

l_count PLS_INTEGER;

l_item_id items.item_id%TYPE;

BEGIN

SELECT json_clob INTO l_json_clob FROM test_json_parsing WHERE json_id = 5;

-- Parse the JSON CLOB.

apex_json.parse(l_json_clob);

-- Count the Records Parsed.

l_count := apex_json.get_count(p_path => 'ITEMS');

-- Loop through parsed records, getting the item_id.

FOR i IN 1..l_count LOOP

l_item_id := apex_json.get_number (p_path => 'ITEMS[%d].item_id', p0 => i);

END LOOP;

END;

JSON_TABLE

DECLARE

CURSOR cr_json_table IS

SELECT jt.*

FROM test_json_parsing tjp

, JSON_TABLE(tjp.json_clob, '$.ITEMS[*]'

COLUMNS (item_id NUMBER PATH '$.item_id',

item_number VARCHAR2(50) PATH '$.item_number',

description VARCHAR2(100) PATH '$.description',

country_of_mfg VARCHAR2(100) PATH '$.country_of_mfg',

channel VARCHAR2(20) PATH '$.channel',

item_uom VARCHAR2(10) PATH '$.item_uom',

date_introduced VARCHAR2(12) PATH '$.date_introduced',

status VARCHAR2(10) PATH '$.status',

orderable VARCHAR2(1) PATH '$.orderable')) jt

WHERE tjp.json_id = 5;

l_count PLS_INTEGER := 0;

l_item_id items.item_id%TYPE;

BEGIN

-- Open the cursor which will use JSON_TABLE to Parse the CLOB.

FOR r_rec IN cr_json_table LOOP

l_item_id := r_rec.item_id;

l_count := l_count + 1;

END LOOP;

END;

JSON_OBJECT_T

DECLARE

l_items_object JSON_OBJECT_T;

lt_items JSON_ARRAY_T;

lr_item_rec JSON_OBJECT_T;

l_json_clob CLOB;

l_count PLS_INTEGER := 0;

l_item_id items.item_id%TYPE;

BEGIN

-- Get the CLOB.

SELECT json_clob INTO l_json_clob FROM test_json_parsing WHERE json_id = 5;

-- Parse the JSON CLOB.

l_items_object := JSON_OBJECT_T.PARSE(l_json_clob);

-- Get the ITEMS Array.

lt_items := l_items_object.get_Array('ITEMS');

-- Get the Record Count.

l_count := lt_items.get_size;

-- Loop through the items and get the item_id for each.

FOR i IN 0..l_count -1 LOOP

lr_item_rec := JSON_OBJECT_T(lt_items.get(i));

l_item_id := lr_item_rec.get_String('item_id');

END LOOP;

END;

APEX_DATA_PARSER

DECLARE

CURSOR cr_items IS

SELECT col001 status

, col002 channel

, TO_NUMBER(col003) item_id

, col004 item_uom

, col005 orderable

, col006 description

, col007 item_number

, col008 country_of_mfg

, col009 date_introduced

FROM apex_data_parser.parse

(p_content => (SELECT json_blob FROM test_json_parsing WHERE json_id = 5),

p_file_name => 'test.json',

p_row_selector => 'ITEMS',

p_detect_data_types => 'N');

l_count PLS_INTEGER := 0;

l_item_id items.item_id%TYPE;

BEGIN

-- Loop through parsed records, getting the item_id.

FOR r_rec IN cr_items LOOP

l_item_id := r_rec.item_id;

l_count := l_count + 1;

END LOOP;

END;

Timings

There is not much I can add here, the timings say it all. Native database JSON parsing offers a massive performance improvement over APEX_JSON.

Building JSON

Test Data

I took the JSON document used in the first test (with 30,000 records) and loaded it into a table called ITEMS.

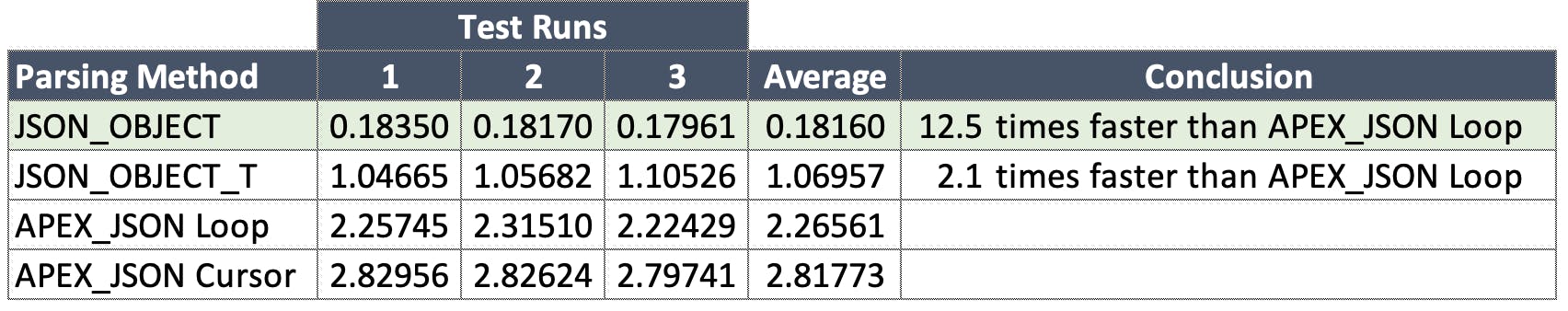

Tests

The goal of this test is to generate a JSON document from the ITEMS table. The JSON file will have the same structure and content as the original test JSON. I compared the performance of APEX_JSON using a cursor vs APEX_JSON in a LOOP vs the native database JSON APIs JSON_TABLE and JSON_OBJECT_T. I used the following code blocks to generate the timings. I ran each code block three times and took the average to calculate the percentage improvements.

Generate JSON using APEX_JSON in Loop

DECLARE

CURSOR cr_items IS

SELECT *

FROM items;

l_json_clob CLOB;

BEGIN

-- Initialize JSON

apex_json.initialize_clob_output (p_indent => 0);

apex_json.open_object; -- {

apex_json.open_array('ITEMS'); -- [ ITEMS

FOR r_item IN cr_items LOOP

apex_json.open_object; -- {

apex_json.write('item_id', r_item.item_id);

apex_json.write('item_number', r_item.item_number);

apex_json.write('description', r_item.description);

apex_json.write('country_of_mfg', r_item.country_of_mfg);

apex_json.write('channel', r_item.channel);

apex_json.write('item_uom', r_item.item_uom);

apex_json.write('date_introduced', r_item.date_introduced);

apex_json.write('status', r_item.status);

apex_json.write('orderable', r_item.orderable);

apex_json.close_object; -- }

END LOOP;

apex_json.close_array; -- ] ITEMS

-- Close out JSON and return CLOB.

apex_json.close_object; -- }

l_json_clob := apex_json.get_clob_output;

apex_json.free_output;

END;

Generate JSON using APEX_JSON with Cursor

DECLARE

crsr sys_refcursor;

l_json_clob CLOB;

BEGIN

-- Initialize JSON

apex_json.initialize_clob_output (p_indent => 0);

apex_json.open_object; -- {

-- Open Cursor and Pass results to APEX_JSON.

OPEN crsr FOR

SELECT * FROM items;

apex_json.write('ITEMS', crsr);

-- Close out JSON and return CLOB.

apex_json.close_object; -- }

l_json_clob := apex_json.get_clob_output;

apex_json.free_output;

END;

Generate JSON using JSON_OBJECT_T

DECLARE

CURSOR cr_items IS

SELECT * FROM items;

l_items_obj json_object_t := json_object_t();

lr_item_rec json_object_t := json_object_t();

lt_items json_array_t := json_array_t ();

l_json_clob CLOB;

BEGIN

-- Loop through items adding them to array.

FOR r_item IN cr_items LOOP

lr_item_rec.PUT('item_id', r_item.item_id);

lr_item_rec.PUT('item_number', r_item.item_number);

lr_item_rec.PUT('description', r_item.description);

lr_item_rec.PUT('country_of_mfg', r_item.country_of_mfg);

lr_item_rec.PUT('channel', r_item.channel);

lr_item_rec.PUT('item_uom', r_item.item_uom);

lr_item_rec.PUT('date_introduced', r_item.date_introduced);

lr_item_rec.PUT('status', r_item.status);

lr_item_rec.PUT('orderable', r_item.orderable);

lt_items.APPEND (lr_item_rec);

END LOOP;

l_items_obj.PUT ('ITEMS', lt_items);

l_json_clob := l_items_obj.to_clob;

END;

Generate JSON using JSON_OBJECT

DECLARE

l_json_clob CLOB;

BEGIN

l_start_ts := SYSTIMESTAMP;

SELECT JSON_OBJECT (KEY 'ITEMS' VALUE (

SELECT JSON_ARRAYAGG(JSON_OBJECT (*) RETURNING CLOB)

FROM ITEMS) RETURNING CLOB

) INTO l_json_clob

FROM DUAL;

END;

Timings

Another win for native database JSON functionality. Although, if you have to build your JSON using PL/SQL and a loop then the timings are much closer.

I was somewhat surprised that with APEX_JSON, it was faster to build the JSON in a loop rather than using the cursor approach.

I was somewhat surprised that with APEX_JSON, it was faster to build the JSON in a loop rather than using the cursor approach.

Conclusion

If you are on 12c or higher of the database, you should consider switching to use native JSON parsing as soon as you can. Since 12c, Oracle has enhanced native JSON capabilities. They are much more robust and feature rich from 19c onward. If you are on 19c or higher then make the switch from APEX_JSON to native JSON today!